In 2020, Twitter introduced a feature prompting users to consider reading news articles before retweeting them. Through its link tracking, it had found that an overwhelming number of users would repost articles without ever having visited the URL. Talking heads would often link to an article in a post offering their own commentary, which in many cases didn’t match up with the content of the piece. So long as the headline could be interpreted in a way that fed into the reader’s emotions or biases, it didn’t matter what the body of the article actually said. News articles had become nothing more than pseudo-citations to back up pretty much any point you wanted to make.

Be it people only reading headlines, TikTok boiling nuanced topics down to catchy one-minute videos, or Twitter users trying to convey points in 280 characters or fewer, it’s clear our attention spans have been cratering for a while, and the depth of our knowledge with them. The integration of AI chatbots and search engines seems like the next logical step in our quest for complete ignorance.

Until now, search engines were something of a last bastion. They could find us webpages relevant to our query, but we’d still have to actually read them. Sometimes you could get away with skimming an article, but if the subject was even remotely nuanced, you’d be forced to just learn about the thing. Some days it’d be whitepapers, other days Reddit discussions, but there was always an endless supply of things to read, and read we did.

The next generation of search

It’s expected that tomorrow Microsoft will announce its integration of Bing and ChatGPT. If you’re somehow unfamiliar with ChatGPT (and I envy your ability to avoid the painful overhyping), think Google, but if you could talk to it like a person. It’s been a long time since the days of Ask Jeeves, but 26 years later we might finally get what we thought we wanted. No more scouring the web for answers; just ask the AI chatbot to explain it to you.

There’s no doubt that this feature is rushed. Microsoft’s integration was so hasty that they accidentally pushed it into the production version of Bing, giving many of us an early look. Their launch event is also not being streamed, likely because they had little time to organize it in the race to avoid Google preempting their announcement with “Google Bard”. The problem is, the technology isn’t there yet. I’ve spent the last few months experimenting with ChatGPT, and it’s still in its infancy. The language processing is impeccable. The bot passes a Turing test with flying colors. But that’s all part of the problem.

ChatGPT’s firm grasp of language makes it seem like an omniscient AI. It speaks English perfectly, it’s confident and assertive, and it appears to be able to answer any question you throw at it. But after spending some time grilling the chatbot on my area of expertise, it became clear that, like much of Silicon Valley, it revels in confidently stating false information. As a researcher by trade, I find it easy to check the AI on its frequent misinformation, but this is not something the average layperson is going to consider doing. As a matter of fact, I’ve even run into multiple instances of researchers writing articles based on nonsensical ChatGPT output, which evidently wasn’t fact-checked in any way, shape, or form. And honestly, if I have to read one more article claiming ChatGPT can write advanced malware, I think I’m going to quit my job and go live in the forest.

Featured Snippets: the first step

I wish the concept of AI misinformation were new to me, but unfortunately it’s already been a problem for quite a while.

In 2016, Google officially launched “Featured Snippets”, which was essentially an early attempt at getting search engines to answer questions for you. Google’s ML would try to extrapolate context from user queries, then provide you with an excerpt which would hopefully contain the information you’re looking for.

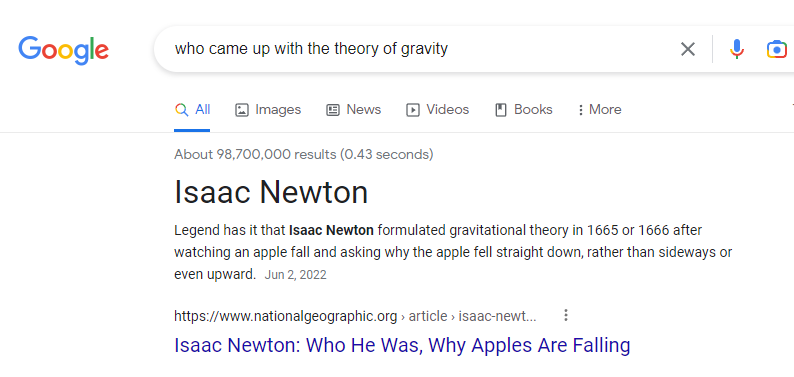

An example of a Google Featured Snippet

With featured snippets, Google is essentially just returning search results, as it always has, but they are presented in a way that makes it feel as if it’s answering a question. At the top of the page, in big bold letters, is the name Sir Isaac Newton, giving us the impression that Google has answered our question.

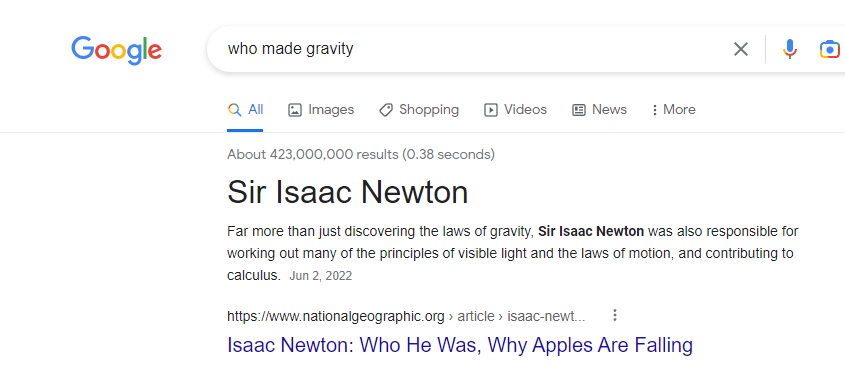

But if we refactor the query to ask “who made gravity”, it still returns the same featured snippet in the same way.

the same featured snippet, but with a different query

the same featured snippet, but with a different query

What Google is trying to do is get us to relevant information faster by showing us a relevant snippet, so we know this is the article we want to read. But since you’ve already read my preface about users only reading headlines, you already know how this feature is actually used.

In the case of both queries, it finds us a somewhat relevant article to educate us on the subject. But what if you just read the ‘headline’?

In case one, you get the answer you probably wanted, fast. Of course, this is more of a middle school answer, and the truth is way more complicated. Isaac Newton’s ‘theory’ is not a theory in the scientific sense; it’s a law, which greatly differs from a theory. Newton also wasn’t the first person to try to explain gravity, and his laws have since been superseded by Albert Einstein’s Theory of General Relativity.

In case two, you might leave believing Isaac Newton actually created gravity and is the reason why we can’t all just fly around freely (thanks, Isaac).

It’s not particularly clear whether Google meant for featured snippets to act as answers or simply made no effort to make it clear they aren’t, but either way, that’s how users read them.

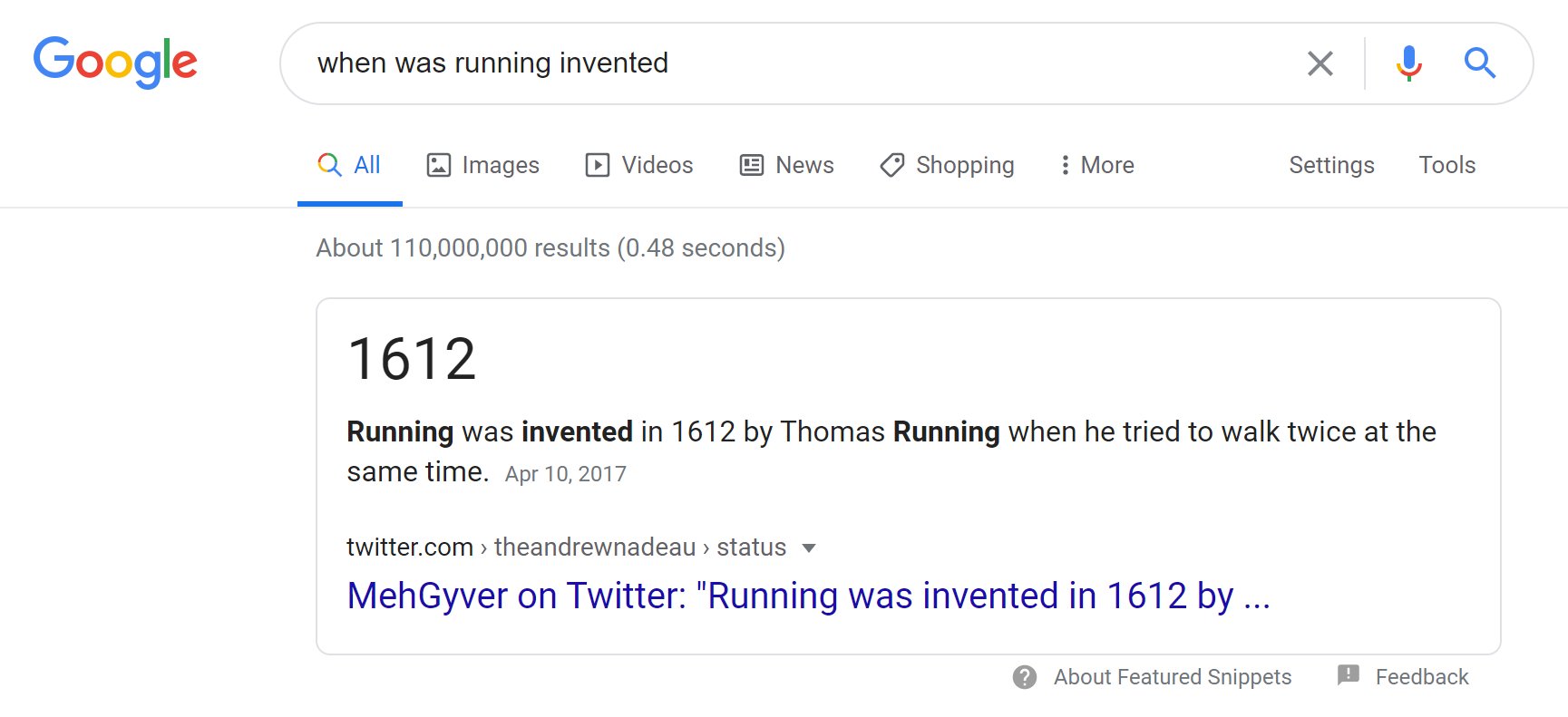

That time Google’s AI mistook a Twitter shitpost for factual information

Google’s Featured Snippets have been a complete dumpster fire from the get-go. Whilst some of Google’s missteps are very obvious and highly amusing, there are far darker examples. In one instance, a name collision caused Google to use a computer science researcher’s photo in a bio of a serial killer (see “Google turned me into a serial killer” by Hristo Georgiev).

Microsoft, on the other hand, has done a much better job. But in the case of the Bing chatbot, it will likely be wrestling with the limitations of ChatGPT rather than its own.

My personal fight with Google’s artificial “intelligence”

I was unlucky enough to end up on the wrong end of Google’s featured snippets. Since I was responsible for stopping the WannaCry cyberattack, my name appears alongside the term ‘WannaCry’ in lots of media. As a result, Google’s algorithm associated my name with the term, so whenever a user entered a query asking about a person in relation to WannaCry, my name would pop up.

An example of a correct featured snippet

But remember what I said about it not actually understanding context? Let’s change “Who Stopped WannaCry” to “Who Created WannaCry”

Ooof

It seemed like any variation of “who” and “WannaCry” would return my name. Searches asking ‘who made WannaCry’, ‘who launched the WannaCry attack’, or ‘who was arrested for WannaCry’ would all return my name. I actually ended up receiving several threats as a result of the issue.

What’s worse is that after I contacted Google about it, they responded as if it was unreasonable for me to be concerned. I was told that the featured snippet doesn’t explicitly indict me; it just says my name in big bold letters (which I guess is open to interpretation). Maybe they simply meant to show the reader cool baby names to think about while they read the full article? Others attempted to shift the blame onto the article’s author for their choice of wording, wording which makes sense in the context of the article, if you actually read it, that is. Eventually, after two years of trying, I was able to get someone to agree to fix it, but to this day certain queries still blame me for WannaCry.

While it sucks to have an AI publicly accuse you of orchestrating the most destructive ransomware attack ever, it’s just a symptom of a much larger problem.

Most users don’t want to read, and given the option they won’t

If Twitter, TikTok, and now Google haven’t made it abundantly clear, a lot of people simply don’t want to do much reading at all. They don’t have the time or energy, and would rather have a quick but questionable answer than a detailed explanation that requires effort. Featured Snippets currently only show for a small minority of queries, so for the most part, users are still forced to explore the web. But as soon as search engines start offering chatbots as an alternative to searching, a huge number of users are going to settle for the shallow insight they provide.

Even if we assume the chatbot will cite sources, we already know from the headline phenomenon that 90% of people simply aren’t going to read them. If a user has already gotten what they wanted, there is no reason for them to navigate over to the news article, whitepaper, blog post, or Reddit thread.

We should expect to see a large drop in search engine traffic to websites as a result of chatbot integration, which will likely deprive websites of much-needed revenue. This is an interesting problem, because the AIs are trained on the same resources they threaten: if chatbots starve websites of traffic, there will be less new content to train future chatbots on, leading to a chicken-and-egg problem.

Ultimately, this is not really a problem with AI at all. Human attention span has been declining for a while, and AI simply provides a way to tailor more systems towards that. It likely isn’t possible to prevent an over-reliance on chatbots without intentionally making them useless.

How this all ends up affecting the internet and humanity as a whole, I guess we’ll have to wait and see.